How to check if there is directory already exists or not in databricks. Use the local file API; it will work only with mounted resources. You need to prepend `/dbfs` to the path: `import os` followed by `dir = '/mnt/..`
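The local-file-API approach can be sketched as follows. This is a minimal sketch, assuming DBFS is FUSE-mounted at `/dbfs` on the machine running the code (as on standard Databricks clusters). The helper name `dir_exists_local` and its `fuse_root` parameter are hypothetical; `fuse_root` exists only so the function can be exercised outside Databricks.

```python
import os


def dir_exists_local(path: str, fuse_root: str = "/dbfs") -> bool:
    """Return True if the DBFS path `path` is an existing directory.

    Hypothetical helper. `path` is a DBFS path such as "/mnt/raw" or
    "dbfs:/mnt/raw"; it is rewritten to "<fuse_root>/mnt/raw" and checked
    with os.path.isdir. Assumes DBFS is exposed locally under `fuse_root`.
    """
    if path.startswith("dbfs:"):
        # Drop the URI scheme so "dbfs:/mnt/x" and "/mnt/x" are treated alike.
        path = path[len("dbfs:"):]
    # Prepend the FUSE mount root, avoiding a doubled or missing slash.
    local_path = fuse_root.rstrip("/") + "/" + path.lstrip("/")
    return os.path.isdir(local_path)
```

On a cluster this would be called as `dir_exists_local("/mnt/my-container/landing")` (a hypothetical mount path). Using `os.path.isdir` rather than `os.path.exists` makes the check return False when the path exists but is a file.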

fs command group | Databricks on Google Cloud

*Azure Databrick dbfs file uploaded and folder created not listing*

fs command group | Databricks on Google Cloud. To display help for the fs command, run `databricks fs -h`. fs commands require volume paths to begin with `dbfs:/Volumes` and require directory ...
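A minimal sketch of driving the CLI from Python, assuming the `databricks` CLI is installed and authenticated, and assuming that `databricks fs ls` exits with a non-zero status when the path does not exist (an assumption, not something the snippet states). All helper names are hypothetical; `volume_cli_path` only enforces the `dbfs:/Volumes` prefix described above.

```python
import subprocess
from typing import List


def volume_cli_path(path: str) -> str:
    """Return a Unity Catalog volume path in the dbfs:/Volumes/... form fs commands expect."""
    if path.startswith("dbfs:/Volumes/"):
        return path
    if path.startswith("/Volumes/"):
        return "dbfs:" + path
    raise ValueError(f"not a volume path: {path!r}")


def fs_ls_command(path: str) -> List[str]:
    """Build the argument list for `databricks fs ls <path>`."""
    return ["databricks", "fs", "ls", volume_cli_path(path)]


def volume_path_exists(path: str) -> bool:
    """Hypothetical check: treat a zero exit status from `databricks fs ls` as "exists".

    Assumes a missing path makes the CLI exit non-zero. Raises FileNotFoundError
    if the `databricks` executable is not on PATH.
    """
    result = subprocess.run(fs_ls_command(path), capture_output=True, text=True)
    return result.returncode == 0
```

Keeping the command construction in its own function makes the path rule testable without a workspace; only `volume_path_exists` actually shells out.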

csv - In Databricks, check whether a path exist or not - Stack Overflow

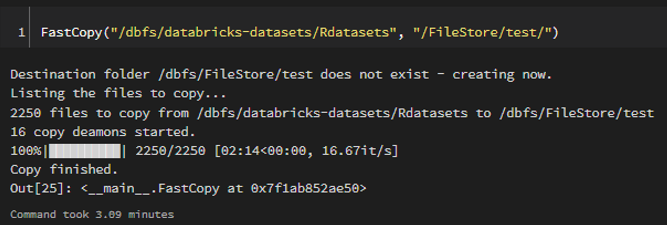

Efficient file manipulation with Databricks | by Robin LOCHE | Medium

csv - In Databricks, check whether a path exist or not - Stack Overflow. For the given example, if you want to subselect subfolders, you could try the following instead. Read sub-directories of a given directory: `# list` ...
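Reading the sub-directories of a directory could look like the sketch below. It is not the Stack Overflow answer's code (which is truncated above); it assumes that, as in `dbutils.fs.ls` output, each entry has `path`, `name` and `size` fields and directory names end with "/". `FileInfo` here is a hypothetical stand-in so the function can run outside Databricks, and `list_subdirectories` and `name_prefix` are hypothetical names.

```python
from collections import namedtuple
from typing import Callable, Iterable, List

# Hypothetical stand-in for the entries returned by dbutils.fs.ls, which carry
# `path`, `name` and `size`; directory entries have a name ending in "/".
FileInfo = namedtuple("FileInfo", ["path", "name", "size"])


def list_subdirectories(
    path: str,
    ls: Callable[[str], Iterable[FileInfo]],
    name_prefix: str = "",
) -> List[str]:
    """Return the paths of the immediate sub-directories of `path`.

    `ls` is the listing function (dbutils.fs.ls on Databricks). Only entries
    whose name ends with "/" are kept, optionally narrowed to names that start
    with `name_prefix` (a simple way to subselect subfolders).
    """
    return [
        entry.path
        for entry in ls(path)
        if entry.name.endswith("/") and entry.name.startswith(name_prefix)
    ]
```

In a notebook this would be called as `list_subdirectories("dbfs:/mnt/raw/", dbutils.fs.ls, name_prefix="2024-")`, with a hypothetical mount path and prefix.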

How to check file exists in databricks - Databricks Community - 27945

*Databricks community edition- Error in SQL statement: IOException*

How to check file exists in databricks - Databricks Community - 27945. One answer defines a helper that uses the local file API for paths under `/dbfs`:

```python
def exists(path):
    """
    Check for existence of path within Databricks file system.
    """
    if path[:5] == "/dbfs":
        import os
        return os.path.exists(path)
    else:
        ...  # the rest of the answer is cut off in this snippet
```
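The snippet stops at the `else` branch. One common way to complete such a helper, shown here as a sketch rather than the original answer's code, is to probe non-`/dbfs` paths with a listing call and treat a `java.io.FileNotFoundException` in the error message as "missing". `path_exists` and its `ls` parameter are hypothetical; on Databricks, `ls` falls back to `dbutils.fs.ls`, and the message match is an assumption about how that call reports missing paths.

```python
import os
from typing import Callable, Optional


def path_exists(path: str, ls: Optional[Callable[[str], object]] = None) -> bool:
    """Return True if `path` exists in DBFS, False if it does not.

    Assumptions (not from the original answer):
    - Paths starting with "/dbfs" go through the local file API (FUSE mount).
    - Any other path is probed with `ls`; on Databricks this defaults to
      dbutils.fs.ls, which raises when the path is missing.
    """
    if path.startswith("/dbfs"):
        # Local file API: only works where DBFS is FUSE-mounted at /dbfs.
        return os.path.exists(path)
    if ls is None:
        # Hypothetical default: the notebook-scoped `dbutils` object.
        ls = dbutils.fs.ls  # noqa: F821 (only defined inside Databricks)
    try:
        ls(path)
        return True
    except Exception as exc:
        # Assumed: a missing path surfaces as a wrapped java.io.FileNotFoundException.
        if "java.io.FileNotFoundException" in str(exc):
            return False
        raise  # permission errors and other failures are not "missing"
```

Re-raising unrelated exceptions avoids reporting a path as absent when the real problem is, for example, missing credentials.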

Feature: Check if a DataBricks path or dir exists by mustious · Pull

*python - No such file or directory is Azure Databricks - Stack*

Feature: Check if a DataBricks path or dir exists by mustious · Pull. Changes: Add a method to `_FsUtils` of `dbutils.py` to check if a directory exists in the Databricks File System (DBFS). There is a general difficulty faced by users ...
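The PR's own method is not reproduced in this snippet. As a hypothetical illustration of a directory-specific check (as opposed to "path exists"), the sketch below lists the parent path and looks for a matching directory entry. It assumes, as in `dbutils.fs.ls` output, that directory names end with "/" and that a missing path surfaces as an exception mentioning `java.io.FileNotFoundException`. `dir_exists` is a hypothetical name, and the listing function is passed in so it can be tested outside Databricks.

```python
from typing import Any, Callable, Iterable


def dir_exists(path: str, ls: Callable[[str], Iterable[Any]]) -> bool:
    """Return True only if `path` exists *and* is a directory.

    Hypothetical helper. Lists the parent of `path` with `ls` (dbutils.fs.ls
    on Databricks) and looks for an entry named "<basename>/", since directory
    entries in that listing carry a trailing slash.
    """
    # "dbfs:/mnt/data/raw/" -> parent "dbfs:/mnt/data", name "raw"
    parent, _, name = path.rstrip("/").rpartition("/")
    try:
        entries = ls(parent + "/")
    except Exception as exc:
        # Assumed: a missing parent surfaces as a wrapped java.io.FileNotFoundException.
        if "java.io.FileNotFoundException" in str(exc):
            return False
        raise
    return any(entry.name == name + "/" for entry in entries)
```

Listing the parent rather than the path itself distinguishes a directory from a file of the same name, and an empty directory from a missing one.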

Efficient file manipulation with Databricks | by Robin LOCHE | Medium

*python - databricks: check if the mountpoint already mounted*

Efficient file manipulation with Databricks | by Robin LOCHE | Medium. You can see that root (/) is the starting folder. Finally, here is a simple helper to check if a given file exists: Test the existence of a path ...

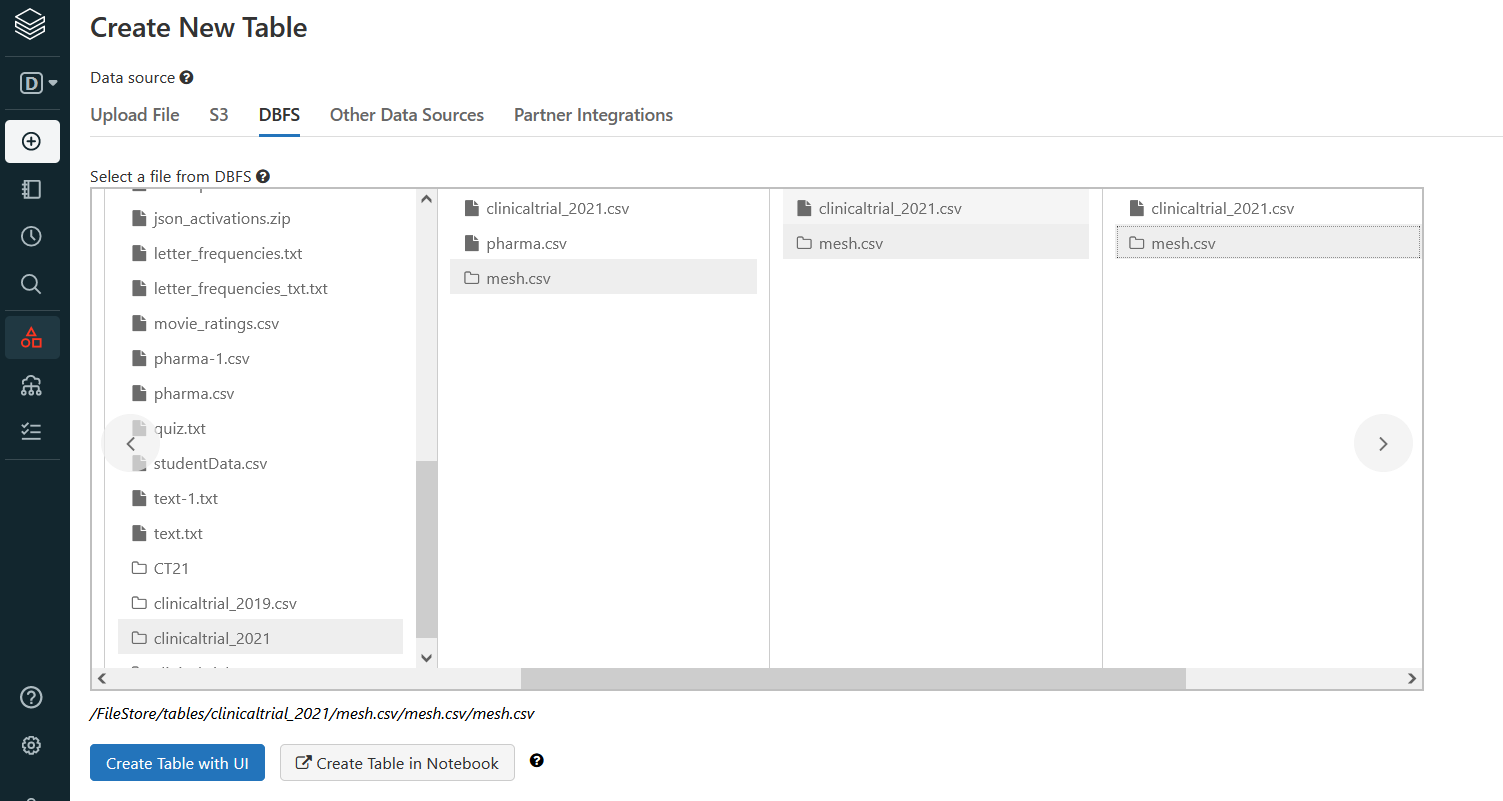

Understanding file paths in Databricks

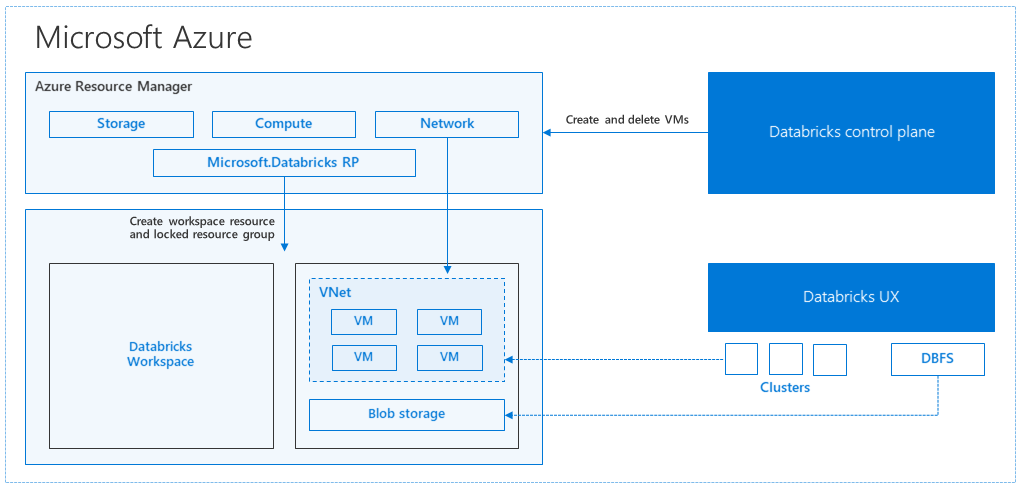

Regional disaster recovery for Azure Databricks | Microsoft Learn

Understanding file paths in Databricks. DBFS is also what we see when we click the Browse DBFS button in the Catalog area of the Databricks UI. Specifying File Paths. In Databricks, ...
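The two path notations that come up when specifying DBFS paths can be related with a pair of hypothetical helpers. This sketch assumes the usual Databricks convention that a DBFS URI such as `dbfs:/mnt/raw` (the form used by Spark and `dbutils.fs`) is visible to the local file API as `/dbfs/mnt/raw`; it performs no I/O and only rewrites strings. `to_fuse_path` and `to_dbfs_uri` are illustrative names.

```python
FUSE_ROOT = "/dbfs"   # where DBFS is assumed to be mounted for the local file API
SCHEME = "dbfs:"      # URI scheme used by Spark and dbutils.fs


def to_fuse_path(uri: str) -> str:
    """Rewrite "dbfs:/mnt/raw" (or bare "/mnt/raw") as the local path "/dbfs/mnt/raw"."""
    if uri == FUSE_ROOT or uri.startswith(FUSE_ROOT + "/"):
        return uri  # already a local FUSE path
    if uri.startswith(SCHEME):
        uri = uri[len(SCHEME):]
    return FUSE_ROOT + "/" + uri.lstrip("/")


def to_dbfs_uri(local_path: str) -> str:
    """Rewrite "/dbfs/mnt/raw" as the URI "dbfs:/mnt/raw"."""
    if local_path.startswith(SCHEME):
        return local_path  # already a URI
    if local_path != FUSE_ROOT and not local_path.startswith(FUSE_ROOT + "/"):
        raise ValueError(f"not under {FUSE_ROOT}: {local_path!r}")
    return SCHEME + "/" + local_path[len(FUSE_ROOT):].lstrip("/")
```

The prefix check uses `FUSE_ROOT + "/"` so that an unrelated local path such as `/dbfsx` is not mistaken for a DBFS path.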

Databricks Utilities (dbutils) reference | Databricks on AWS


Databricks Utilities (dbutils) reference | Databricks on AWS. ... creates the given directory if it does not exist, also creating any necessary parent directories. `mount`: Mounts the given source directory into DBFS at ...
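Because `dbutils.fs.mkdirs` creates a directory only when it is missing, it needs no existence check beforehand. Mounting is different: mounting a point that is already mounted raises an error, so a check first is useful. A minimal sketch, assuming an object `fs` that behaves like `dbutils.fs` (`mounts()` returning entries with a `mountPoint` attribute, and `mount(source=..., mount_point=...)`); `is_mounted` and `mount_if_needed` are hypothetical names.

```python
from typing import Any, Iterable


def is_mounted(mount_point: str, mounts: Iterable[Any]) -> bool:
    """Return True if `mount_point` matches the mountPoint of any entry in `mounts`.

    On Databricks, `mounts` would be dbutils.fs.mounts(). Trailing slashes are
    ignored so "/mnt/raw" and "/mnt/raw/" compare equal.
    """
    wanted = mount_point.rstrip("/")
    return any(m.mountPoint.rstrip("/") == wanted for m in mounts)


def mount_if_needed(source: str, mount_point: str, fs: Any, **mount_kwargs: Any) -> bool:
    """Mount `source` at `mount_point` unless it is already mounted.

    `fs` is assumed to behave like dbutils.fs. Returns True if a mount was made.
    Extra keyword arguments (e.g. extra_configs) are passed through to fs.mount.
    """
    if is_mounted(mount_point, fs.mounts()):
        return False
    fs.mount(source=source, mount_point=mount_point, **mount_kwargs)
    return True
```

In a notebook this would be `mount_if_needed(source_url, "/mnt/raw", dbutils.fs, extra_configs=configs)`, where `source_url` and `configs` are placeholders for your storage location and credentials.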

How to check if there is directory already exists or not in databricks

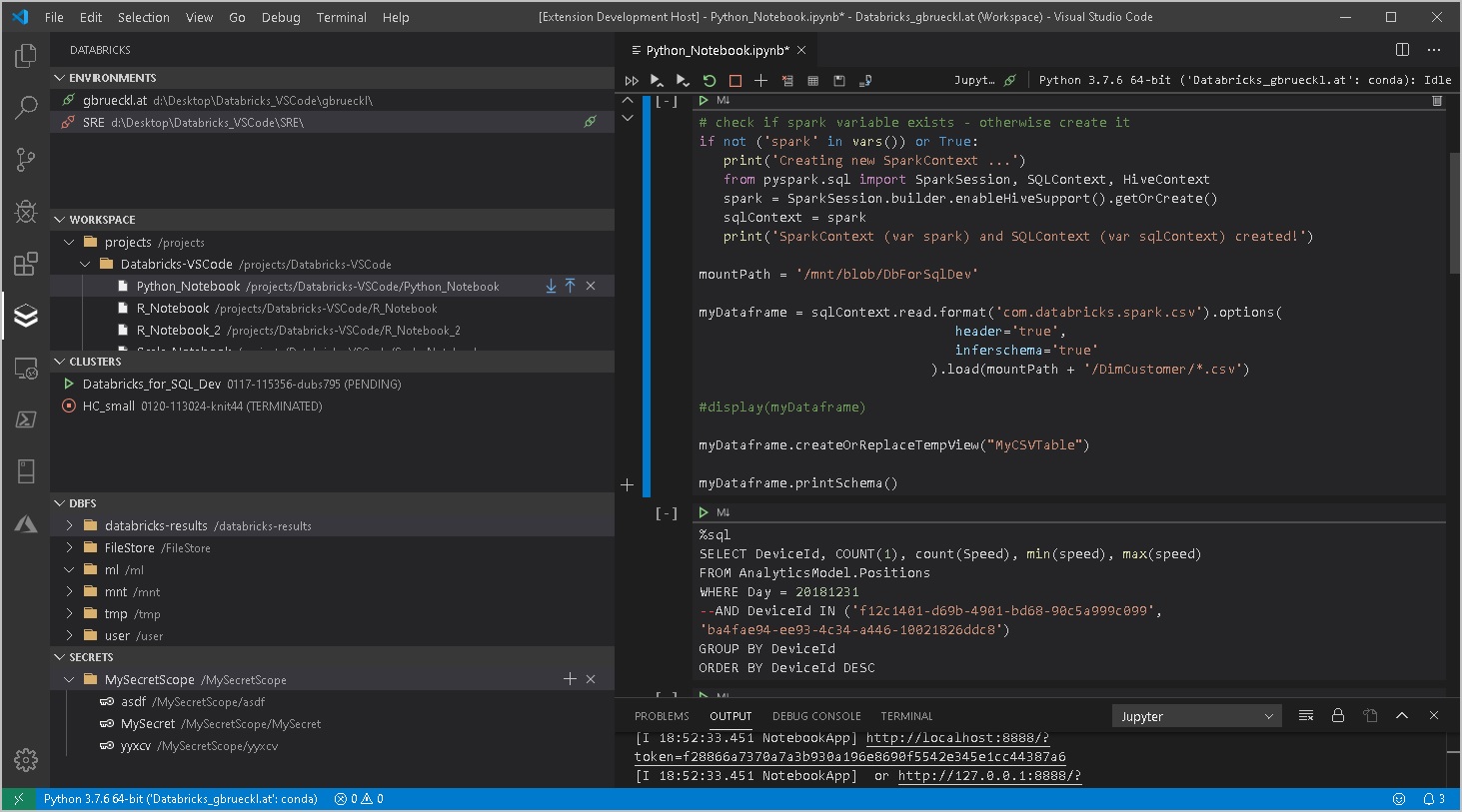

*Professional Development for Databricks with Visual Studio Code*
